Laura Carpenter

San Jose State University, MLIS program

Information 289: Advanced Topics in Library and Information Science (E-Portfolio)

E-portfolio advisor: Dr. Joni Richards Bodart

November 15, 2021

Competency N:

Evaluate programs and services using measurable criteria.

Section 1 of 4:

An Introduction to What Competency N Means to Me and to Library and Information Science

Background Knowledge:

Evaluating programs and services using measurable criteria is important to information science because it allows data to be recorded, organized, and used to create attainable action steps that continuously improve services and programs. When evaluations are used to create detailed, specific action steps, the design of information services and programs improves. Many evaluative tools and strategies are available. Some of the measurable criteria tools that I like are:

-The Wisconsin Department of Public Instruction provides an excellent guide to evaluating services for inclusivity.

-StudyLib provides an excellent rubric for assessing library services. Like most evaluative tools, it can be customized to specific users and goals.

-Connected Learning Alliance provides data collection tools such as documentation strategies and surveys to use when evaluating programs and services.

-The Colorado Department of Education provides an extremely detailed rubric format meant for evaluating teacher-librarians, but this detailed rubric structure can easily be modified by the user to effectively measure and evaluate different data sets, such as a service or program.

Why Competency N is Important to Me as a Professional:

Issues of equity, diversity, and inclusion (EDI) are at the heart of my approach to library and information science. Evaluating programs and services with measurable criteria provides a valuable opportunity for information professionals to better serve peoples who have historically been excluded. An important element of evaluating for EDI is opening crucial dialogs between information professionals and their patrons, clients, communities, and staff. I find that an interesting way to approach measurable criteria for issues of EDI is to start with the Bechdel test, which many are familiar with, and the Aila test, which many are not yet familiar with.

The Bechdel test evaluates movies for their representation of womxn by asking two questions: 1) Does the film have at least two named female characters? 2) Do those characters have at least one conversation that is not about a man? (Hickey et al., 2017). The Aila test is designed to evaluate the representation of Indigenous womxn in media by asking “1) Is she an Indigenous/Aboriginal woman who is a main character; (2) who does not fall in love with a white man; (3) and does not end up raped or murdered at any point in the story?” (Vassar, 2020, Interview section). The goal of both of these measurable criteria sets is to ascertain whether a historically excluded person is being portrayed as an autonomous human being or as a stereotype meant to further the narrative of a more privileged demographic. These tests were created because most media failed to meet even these very simple criteria.

When evaluating programs and services for issues of EDI, the mindset behind tests like Bechdel and Aila is beneficial. It is important to ascertain whether historically excluded peoples are being included, portrayed, and welcomed as autonomous human beings, or whether programs and services rely on stereotypical information and representation and instead serve the narrative of a more privileged demographic.

Why Competency N is Important to the Profession as a Whole:

When information professionals understand that historically excluded peoples have been provided with such extensive mis-portrayals of themselves, we can all work to create measurable criteria that evaluate programs and services with the goal of providing inclusive, diverse, equitable, informed, and accurate levels of service. When we use measurable criteria to evaluate programs and services, we create concrete data for our profession to use in our quest to continuously adapt our services and programs to the shifting needs and wants of our patrons, clients, and staff. Using rubrics designed to evaluate programs and services as a whole, as well as rubrics designed to evaluate them for specific elements (such as EDI), is an excellent way to create evaluative data. Using the data to create better levels of service, following up with more evaluative criteria at designated intervals, and continuously seeking feedback on changes allow our profession to lead in offering relevant and important services and programs. Failing to do so leads to irrelevant and problematic service and programming standards.

What Excellence in Competency N Looks Like to Me:

When I think of information professionals who are excelling in evaluating programs and services using measurable criteria, I see several strong patterns emerge. I see librarians researching current and emerging evaluation tools to find helpful references and options. I see them customizing rubrics, creating new and highly relevant measurable criteria, and using these criteria to evaluate their programs and services. I see detailed record keeping, written proposals to create and implement beneficial changes, follow-up evaluations over extended time frames, and community meetings that host discussions on what patrons want or would like to see, what they do not want, and how they feel about implemented changes. Most importantly, I see information professionals making the active decision to create positive change and to put in the work it takes to do so. To me, making that active decision and following through is key. It can be hard to collect and see data and feedback that provide constructive criticism on services and programs that a professional may have designed themselves. It can be hard to hear criticism and negative feedback from the community. Yet information professionals who choose to persevere because they believe that their patrons, clients, communities, and staff deserve better options, deserve wonderful options, truly embody excellence in using measurable criteria to provide outstanding service levels.

Section 2 of 4:

The Discussion of My Evidence

As my “Evidence A”, I am presenting the assessment tools that I developed and built into my Children's Librarian's 12-Month Programming Plan, as well as my Early Childhood Literacy plan (which is still in progress).

How and Why I Created Evidence A:

For my “Information 260A: Programming and Services for Children” course, I was tasked with creating a year’s worth of programming for a children’s librarian. The programming plan had to include a section describing how I planned to assess my programs, how I would implement the evaluations of programs and services, and how I would convey the evaluative results.

The Process of Creating Evidence A:

When I was deciding how I wanted to approach creating my own measurable criteria, I knew that I wanted to focus largely on issues of equity, diversity, and inclusion. I thought about previous library programming and services I had created and moderated, as well as the information that I was being exposed to in my courses and the focus of many of my assignments. A common theme ran through all of these resources: holding discussions with the community, evaluating accessibility issues, and providing relevant services.

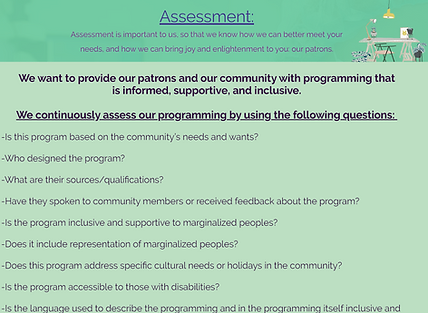

I decided to frame my evaluative assessment tools as if I were presenting them to the community, patrons, and clients whom they affected. These evaluative criteria could be applied to any program being evaluated for how inclusive and supportive it is to patrons. I then listed in Evidence A the evaluative questions I developed, which would be asked of all services and programs provided. The questions I developed are:

-Is this program based on the community’s needs and wants?

-Who designed the program?

-What are their sources/qualifications?

-Have they spoken to community members or received feedback about the program?

-Is the program inclusive and supportive to marginalized peoples?

-Does it include representation of marginalized peoples?

-Does this program address specific cultural needs or holidays in the community?

-Is the program accessible to those with disabilities?

-Is the language used to describe the programming and in the programming itself inclusive and supportive?

-Is there signage or decor present in the children’s area or places where the programs are to be held that promotes inclusivity, support, and acceptance of all peoples?

To continue embodying the message that these evaluative criteria are meant to provide what the community wants and needs, I included a statement encouraging input, feedback, and suggestions, with a designated way to communicate directly with the evaluator. I then moved on to a section providing transparency on how I would implement these evaluative criteria. I chose to create a form listing the criteria/questions that each program and service would be evaluated on. I explained that I would sit silently in the back of each program held during a designated evaluative week, evaluating it with the form and answering each question with a short answer. I would also provide surveys near the door that program participants would be encouraged to fill out, ascertaining their perceptions of and satisfaction with the programming, as well as asking for suggestions for future programming they would like to see.

The survey would also ask whether participants would be interested in joining a focus group to help better understand community wants and needs for programming and services. Interested participants would be invited to take part. The focus group would be hosted by me and other interested staff to discuss the community's programming needs and wants. I would use the results of the assessments to gather informative and instructional data in order to provide concrete information and next steps to reach an even higher level of supportive and inclusive services for patrons.

Creating Evidence A was the first time I had created my own assessment tools. Now in the last semester of the MLIS program, if I were to go back to update these tools I would make significant changes. I would first structure my assessment questions into a rubric format instead of a list. I would then have designated spaces to rate each criterion on different levels of achievement (Excellent, Good, Poor, Unachieved). This would make my criteria easier to measure over time, rather than having to interpret short answers. I would also update my terminology to use the more supportive descriptor of “historically excluded peoples” instead of “marginalized”.
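The rubric format described above could be sketched in a few lines of Python. This is only an illustration, not part of Evidence A: the function name `summarize`, the shortened criterion labels, and the example ratings are all hypothetical. Only the four achievement levels come from the text.

```python
from collections import Counter

# Achievement levels named in the text, ordered from highest to lowest.
LEVELS = ["Excellent", "Good", "Poor", "Unachieved"]


def summarize(ratings):
    """Count how many criteria landed on each achievement level."""
    for criterion, level in ratings.items():
        if level not in LEVELS:
            raise ValueError(f"Unknown level {level!r} for {criterion!r}")
    counts = Counter(ratings.values())
    # Report every level, including those no criterion received.
    return {level: counts.get(level, 0) for level in LEVELS}


# Hypothetical ratings for a single program (labels shortened for illustration).
example_ratings = {
    "Based on community needs and wants": "Good",
    "Accessible to those with disabilities": "Excellent",
    "Inclusive and supportive language": "Good",
    "Inclusive signage or decor": "Poor",
}

summary = summarize(example_ratings)
print(summary)
```

A fixed set of levels keeps every evaluation comparable with the next, while a comments field alongside each rating (not shown) could preserve the qualitative detail that short answers provide.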

Why I Chose to Feature Evidence A and How Evidence A Shows Competency:

Evidence A showcases my ability to think critically about important criteria that should be evaluated in programming and service offerings. For example, Evidence A includes criteria such as the qualifications of the person who designed the program. Evidence A also demonstrates my ability to implement a holistic and thorough evaluative strategy. Evidence A does this through presenting the measurable criteria, having a target audience of affected community members, asking for direct feedback, providing a direct communication portal, providing the form I will use to evaluate services, describing the strategy I will use to do so, incorporating surveys and focus groups with the community, and describing how assessment results will be organized, conveyed, and utilized.

My ability to critically evaluate my own assessment tools shows a willingness to embrace constructive criticism and to continuously adapt not only programs and services, but my own evaluative criteria and tools as well. I acknowledge that, now that my expertise has grown, I would reformat my tools and criteria as a rubric instead of a list. Evidence A demonstrates that my goal as an information professional is not to add a feather in my own cap or to provide lip service to an ideal, but to genuinely strive to provide services and programming that are constantly evolving to better serve my community. Evidence A also shows my strong and consistent focus on incorporating community dialog into the evaluative criteria.

Section 3 of 4:

How Creating the Evidence Helped Me Gain Expertise in Competency N

How Creating Evidence A Helped Me Gain Competency in the Area of N:

Creating Evidence A provided me with the opportunity to sit down and really think about what I felt was most crucial to incorporate into evaluative criteria for programs and services. As with most of my graduate work, the criteria centered around a simple question: does the element in question work to dismantle systems of oppression or does it work to maintain a status quo of oppression? While the question is straightforward, the answer requires knowledge of the more detailed nuances of oppression, a willingness to examine all components under a microscope, the ability to not only welcome but seek out constructive criticism, the input, feedback, and dialog of historically excluded peoples, and constant evaluation in order to continuously evolve.

Creating Evidence A allowed me to examine the concept of measurable criteria from the inside out, to take it apart and put it back together like a car engine or a puzzle. Choosing to create my own assessment tool instead of adapting an existing method allowed me to experiment with trial and error, which is an important learning style for me. Evidence A also allowed me to create not only a list of measurable criteria, but an entire holistic implementation plan spanning forms, short answers, observation, surveys, focus groups, and presentations.

How Creating Evidence A Changed My Way of Thinking on Competency N:

Before creating Evidence A, I understood the importance of evaluating programs and services to ensure goals were being met, but I did not have as firm a grasp on the details and nuance of measurable criteria. In creating Evidence A, I came to learn that measurable criteria could be molded and adapted to evaluate for strategic purposes (such as EDI). Evidence A became an assignment that I went back to time and time again throughout the MLIS program. Even after the assignment and course were completed, I thought about what I would change in my assessment tools and why I would make those changes. While I agree with the questions I developed, I now understand that they would be more effective and measurable as a rubric with ranked levels. I hope to eventually adapt my assessment tools into a rubric format. This will allow me to use my evaluations to improve the design of programs and services. A rubric allows for easier processing of the information: for example, a category is ranked as A, B, or C. Over time and numerous evaluations, a type of graph emerges showing whether each criterion is improving, declining, or holding at a steady level. Using that data, needed improvements can be made.
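The idea of watching rankings change across evaluations could be sketched as follows. This is a minimal Python illustration under stated assumptions: the point values assigned to A, B, and C, the function name `trend`, the criterion labels, and the example history are all hypothetical, and comparing only the first and last evaluation is a deliberate simplification of what a graph would show.

```python
# Assumed numeric values for the A/B/C rankings mentioned in the text.
GRADE_POINTS = {"A": 3, "B": 2, "C": 1}


def trend(grades):
    """Classify a list of grades (oldest first) by comparing first and last."""
    if len(grades) < 2:
        return "not enough data"
    first = GRADE_POINTS[grades[0]]
    last = GRADE_POINTS[grades[-1]]
    if last > first:
        return "improving"
    if last < first:
        return "declining"
    return "steady"


# Hypothetical rankings from three evaluation periods, oldest first.
history = {
    "Accessibility": ["C", "B", "A"],
    "Community feedback": ["B", "B", "B"],
    "Representation": ["A", "B", "C"],
}

trends = {criterion: trend(grades) for criterion, grades in history.items()}
for criterion, direction in trends.items():
    print(f"{criterion}: {direction}")
```

With short answers alone, this kind of comparison would require rereading and interpreting every response; ranked levels make the direction of change visible at a glance.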

Coursework That Has Prepared Me for Competency N:

In my “Information 260A: Programming and Services for Children” course, I created detailed templates for baby, toddler, and all-ages storytimes. The templates take measurable early childhood literacy criteria and pair each element with specific books, activities, and storytime elements that support those criteria. In my “Information 204: Information Professions” course, I completed a SWOT (strengths, weaknesses, opportunities, threats) analysis which allowed me to virtually evaluate a public library using measurable criteria (staff skills, programming, budget, community relations, employee retention, accessibility, remote options). I also completed a budget reduction after evaluating a fictional library branch’s financial needs, budgetary restrictions, and available resources. Finally, I completed a Management Response Paper addressing a fictional scenario at a public library branch, in which I evaluated a problem (patron complaints) and its situational background, created an analysis based on my evaluations, and used that analysis to present solutions to improve the library’s services and response to the issue.

In my “Information 237: School Library Media Materials” course, I completed a Qualitative Rubric for Text Analysis, which presents my evaluation of Speak by Laurie Halse Anderson against extensively detailed measurable criteria for teaching the novel to 9th grade students. I also completed four separate Self-Evaluation Rubric Tools after major projects. I completed these evaluative rubrics in order to notice patterns in the service levels I provided and to determine areas for improvement.

How I Have Changed From the Person I Was Before These Courses, to the Person After, to the Person I Am Now:

Before I took these courses and completed these assignments, I thought of evaluation as a qualitative endeavor. This can be seen in how I originally formatted my own assessment tools: a list of short answer questions. After extensive coursework and course materials regarding measurable criteria, I now see the evaluation of programs and services as something that benefits significantly from quantitative data as well. The questions I created are important, but being able to measure their effectiveness is better suited to rubric formatting that will provide measurable rankings. Those rankings can be more easily analyzed over time and across numerous evaluations. I feel that both qualitative and quantitative approaches are important when evaluating programs and services. Using only one approach deprives the results of substance and of relevance. I now know that evaluating programs and services with measurable criteria is an endeavor in which qualitative evaluation and quantitative evaluation working together provide the best method for improvements.

What I Have Learned:

I have learned that evaluating programs and services is best achieved by using specific, measurable, and well-organized criteria. I learned this by creating my own assessment tool as a list of questions and later realizing that this format would make results more difficult to interpret. Organizing and presenting data in a rubric or another easy-to-rank format makes important information easier to interpret, especially over time. I also learned this by researching and observing the evaluative tools that others had created and by completing numerous evaluative rubrics myself. Evaluating programs and services in a measured way, and using that detailed data to assess existing and potential programs and services and to find ways to bring even better options to those involved, shows high-level program management skills.

Section 4 of 4:

How the Knowledge I Have Gained Will Influence Me in the Future, as an Information Professional

What I Bring to the Position, in Terms of Competency N:

I bring with me a comprehensive understanding of the importance of using measurable criteria when evaluating programs and services. I bring extensive knowledge of how to best utilize evaluative strategy and how to implement wide-spanning evaluation programs. I bring the ability to assess specific needs and to customize evaluation plans and measurable criteria to specific situations. I bring with me the ability to acknowledge and recognize my mistakes, and to use the knowledge gained to create even better programs and services. I bring a genuine passion for providing my clients, patrons, and communities with continuously evolving services that meet their changing needs. I also bring a sense of humor and perspective, which is always helpful to have around.

How My Learning in Competency N Will Contribute to My Professional Competence in the Future:

I am an excellent evaluator of programs and services because I can assess specific evaluation needs and customize measurable criteria to utilize best practices for individual situations. I learned how to assess, evaluate, and modify measurable criteria by designing my own assessment tools (Evidence A), customized to evaluate issues of EDI in children’s public library programming. Designing assessment tools and criteria taught me to incorporate a holistic approach in my evaluations and to include a detailed implementation plan. My understanding of measurable criteria (including my drive to continuously improve my own evaluative techniques in order to continuously improve programs and services), and my ability to incorporate what I learn from the evaluative tools constructed by others, make me an outstanding candidate in the arena of evaluative services.

Illustration by Allie Brosh

Brosh, A. (2013). Hyperbole and a half: Unfortunate situations, flawed coping mechanisms, mayhem, and other things that happened. [Cover Art]. Touchstone Books.

"...I Should Have Used a Rubric": The Laura Carpenter Story

References:

Anderson, L. H. (1999). Speak. Farrar, Straus and Giroux.

Brosh, A. (2013). Hyperbole and a half: Unfortunate situations, flawed coping mechanisms, mayhem, and other things that happened. Touchstone Books.

Colorado Department of Education. (2016). Overarching goals of the highly effective school library program (HESLP) rubric. Highly Effective School Library Program. https://www.cde.state.co.us/cdelib/2016heslprogram

Hickey, W., Koeze, E., Dottle, R., & Wezerek, G. (2017, December 21). We pitted 50 movies against 12 new ways of measuring Hollywood’s gender imbalance. FiveThirtyEight. https://projects.fivethirtyeight.com/next-bechdel/

Jochem, M., & Brommer, S. (n.d.). Inclusive services assessment guide for WI public libraries. Wisconsin Department of Public Instruction. https://www.scls.info/sites/www.scls.info/files/documents/file-sets/122/inclusive-services-assessment-guide-wi-public-libraries-1.pdf

StudyLib. (2006). Library services assessment plan. https://studylib.net/doc/13011737/library-services-assessment-plan

Vassar, S. (2020, May 14). The ‘Aila Test’ evaluates representation of Indigenous women in media. High Country News. https://www.hcn.org/articles/indigenous-affairs-interview-the-aila-test-evaluates-representation-of-indigenous-women-in-media

Widman, S., Penuel, W., Allen, A., Wortman, A., Michalchik, V., Chang-Order, J., Podkul, T., & Braun, L. (2020). Evaluating library programming: A practical guide to collecting and analyzing data to improve or evaluate connected learning programs for youth in libraries. Connected Learning Alliance. https://clalliance.org/wp-content/uploads/2020/08/Evaluating-Library-Programming.pdf